
I am a research scientist at Huawei Noah’s Ark Lab. Before that, I received my Ph.D. from the School of Computer Science and Technology, Harbin Institute of Technology, Shenzhen (HITSZ), supervised by Prof. Zenglin Xu. My research focuses on combining tensor decomposition techniques with deep neural networks for a variety of tasks, including model compression and efficient training. Feel free to contact me!

Interests: Tensor Learning, Model Compression, Model Initialization, Training Efficiency.

Email / CV / Google Scholar / GitHub


02/2025: One paper accepted at ICLR 2025.

12/2023: One paper accepted at AAAI 2024.

09/2023: One paper accepted at NeurIPS 2023.

02/2023: Published a preprint on tensorial neural networks, with an accompanying collection on the web.

05/2022: One paper accepted at ICML 2022.

02/2022: Published a LaTeX template, paperlighter.sty, for writing papers in a simple way.


Reviewer, NeurIPS 2020-present

Reviewer, ICML 2021-present

Reviewer, ICLR 2022-present


Yu Pan, Chaozheng Wang, Zekai Wu, Qifan Wang, Min Zhang, Zenglin Xu

ICLR, 2025

abs / arXiv

Using the identity matrix to construct an initialization method for fast and stable training of deep neural networks.


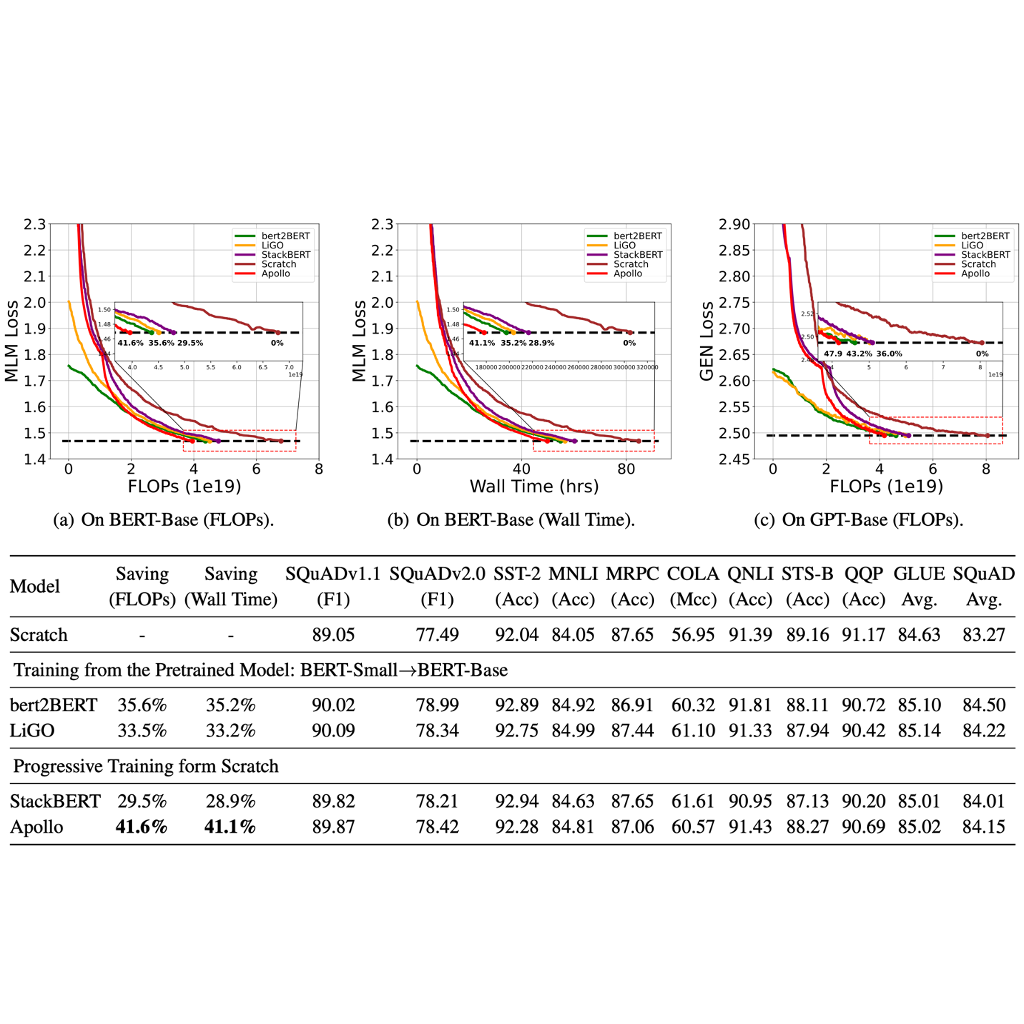

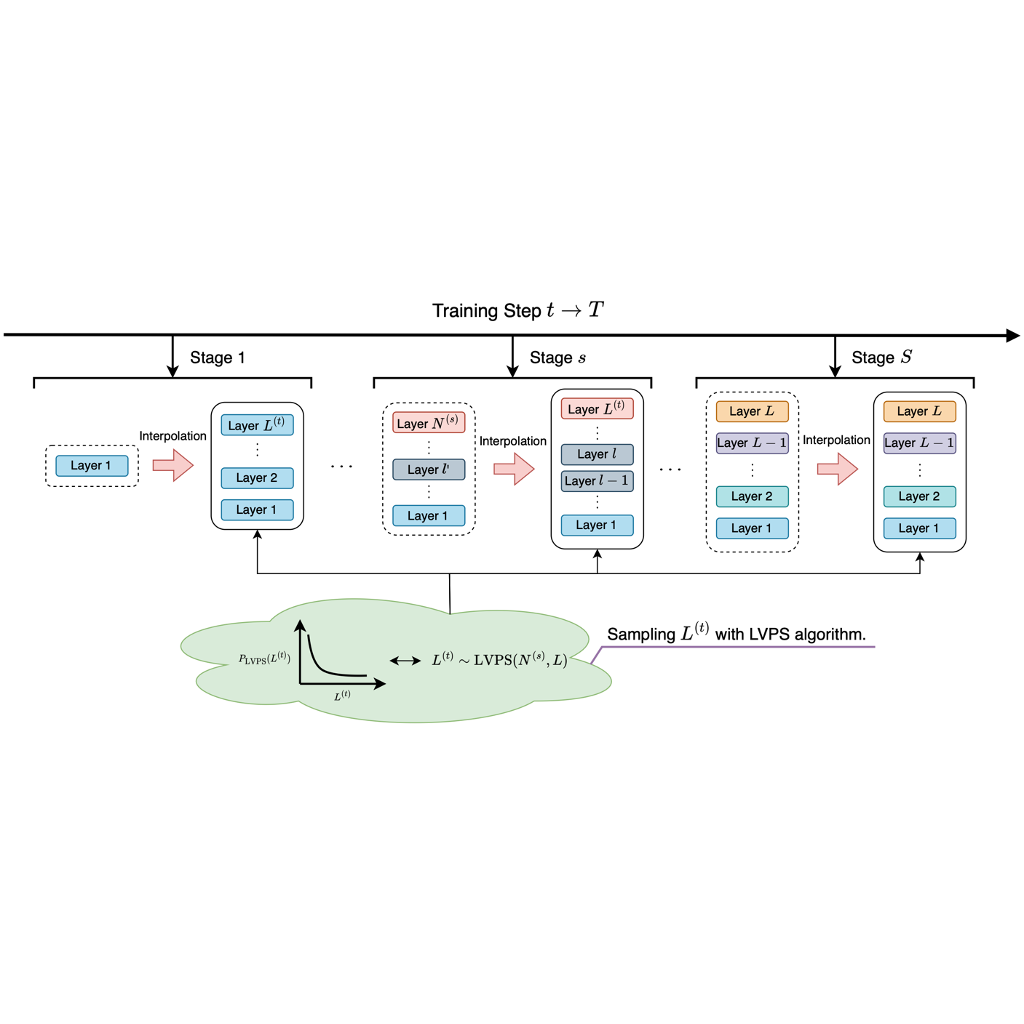

Yu Pan*, Ye Yuan*, Yichun Yin, Jiaxin Shi, Zenglin Xu, Ming Zhang, Lifeng Shang, Xin Jiang, Qun Liu

AAAI, 2024 (Oral, Top 10%)

arXiv

Accelerating the pretraining of language models by employing LVPS to prelearn the functionalities of deeper layers at a reduced resource cost.


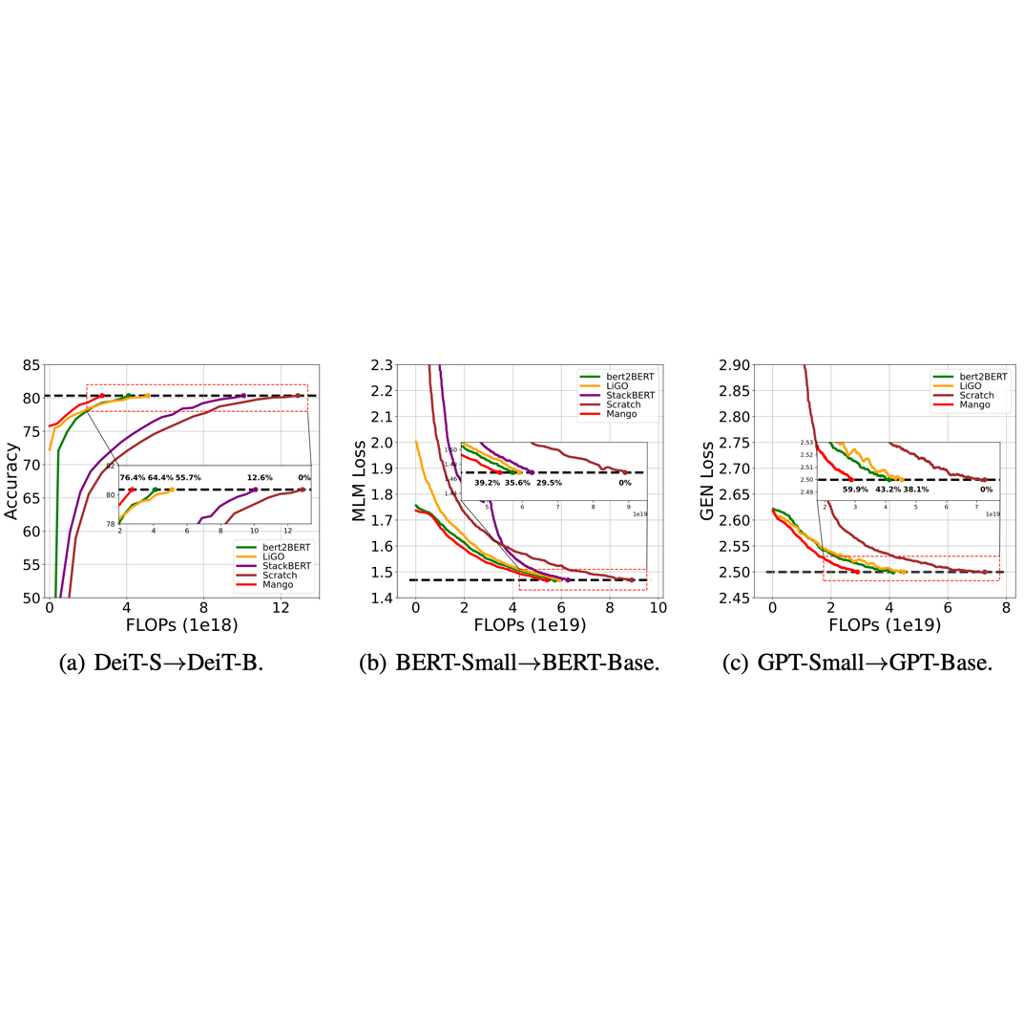

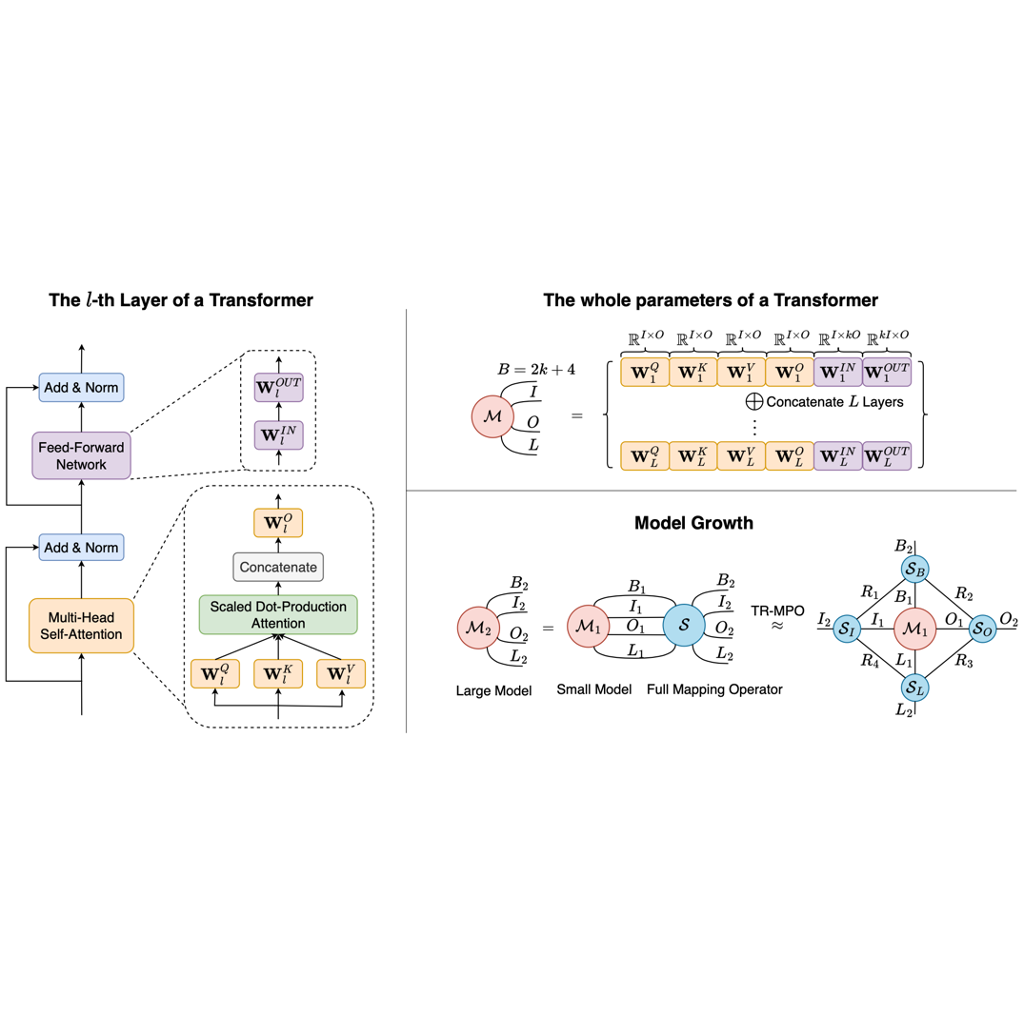

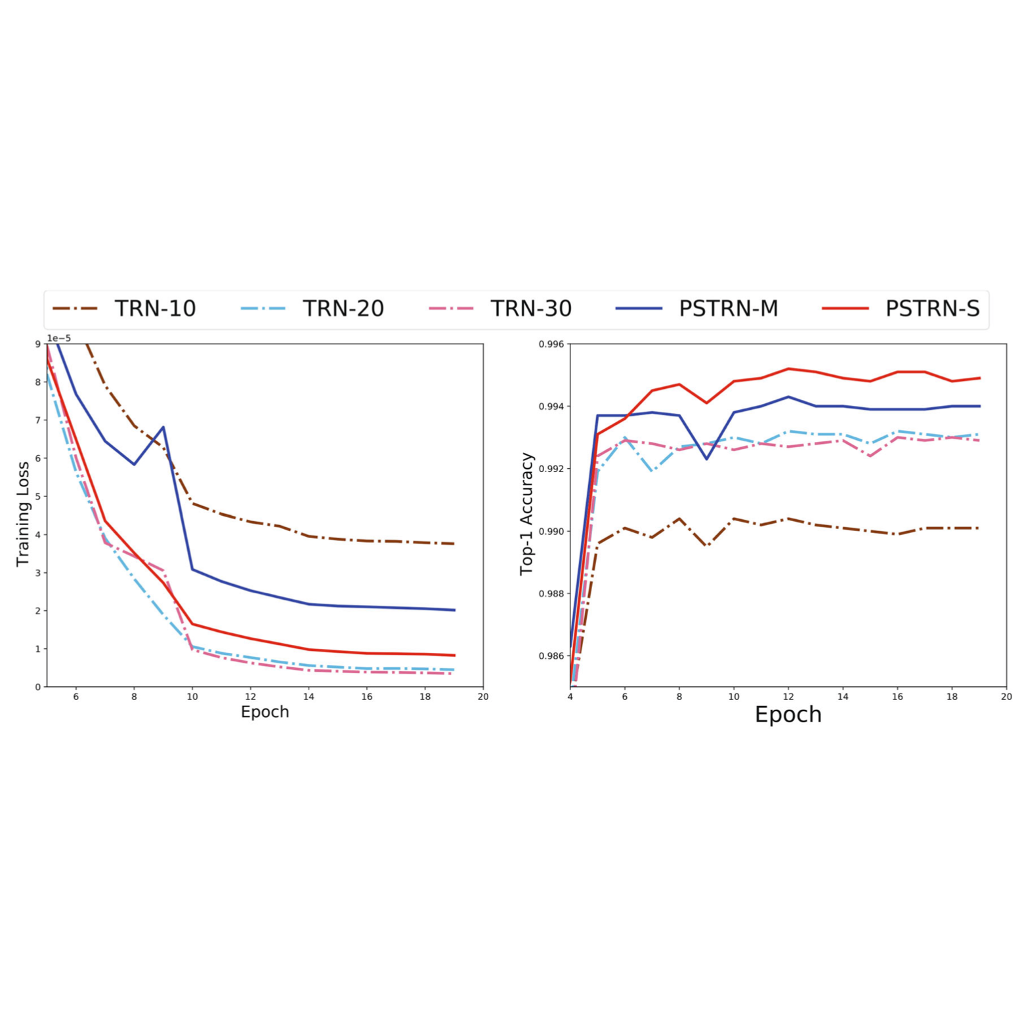

Yu Pan, Ye Yuan, Yichun Yin, Zenglin Xu, Lifeng Shang, Xin Jiang, Qun Liu

NeurIPS, 2023

arXiv

Using the tensor ring matrix product operator (TR-MPO) to grow a small pretrained model into a larger counterpart for efficient training.


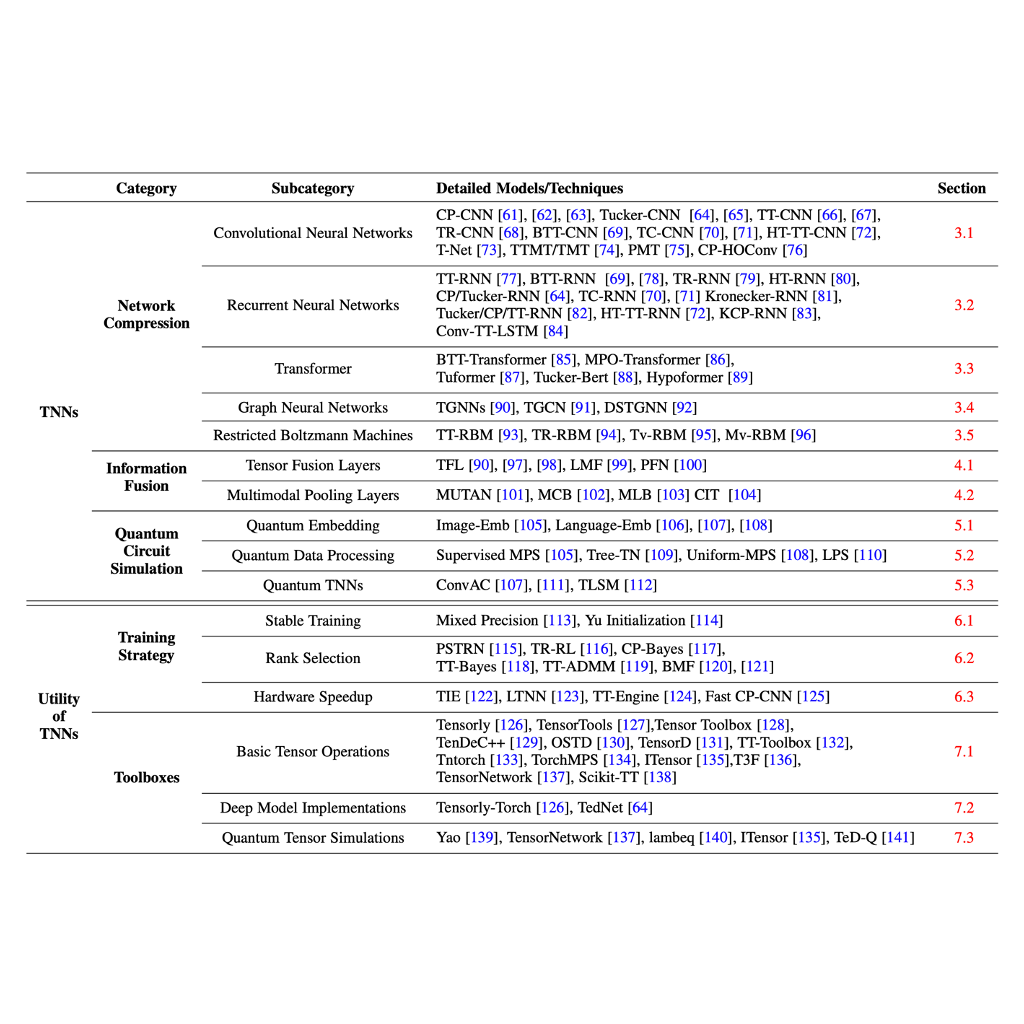

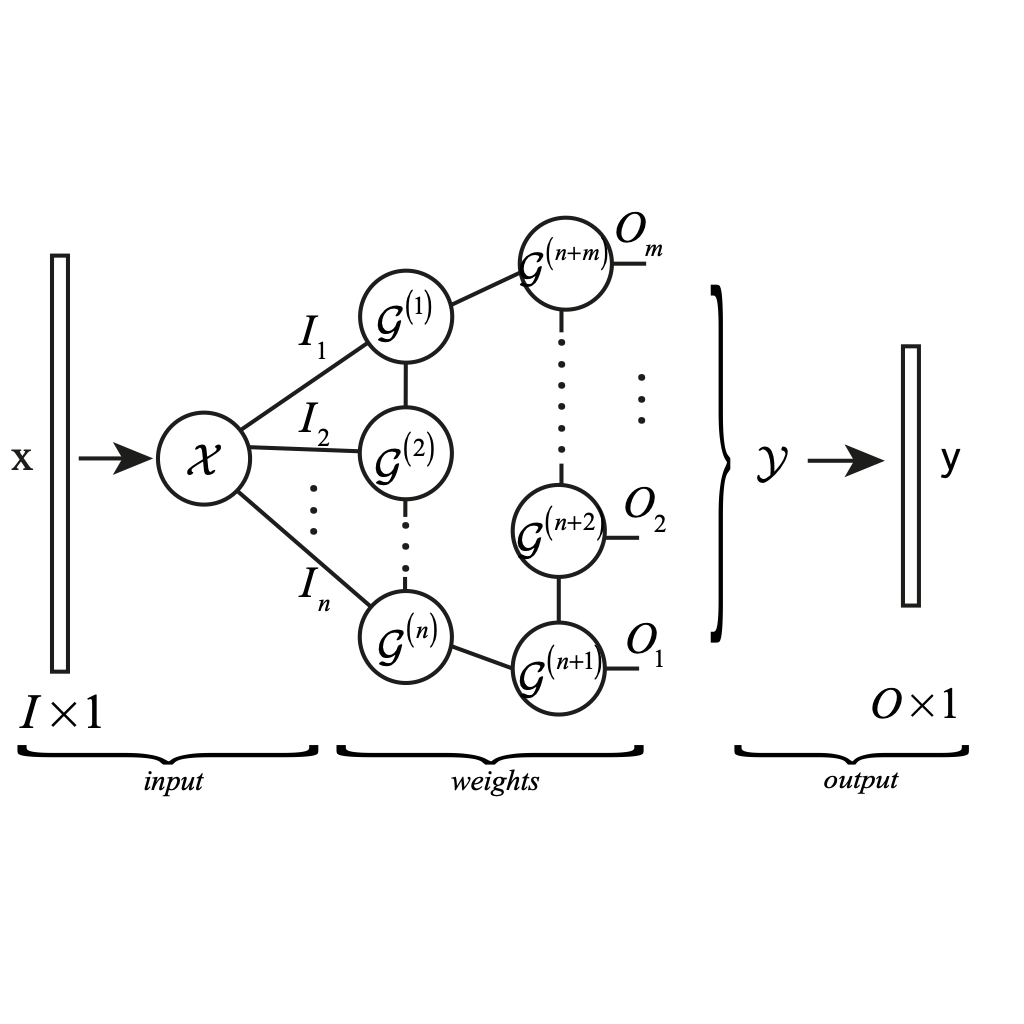

Yu Pan*, Maolin Wang*, Zenglin Xu, Xiangli Yang, Guangxi Li, Andrzej Cichocki

Preprint, 2023

arXiv / code

A thorough survey of tensorial neural networks (TNNs), covering network compression, information fusion, and quantum circuit simulation.


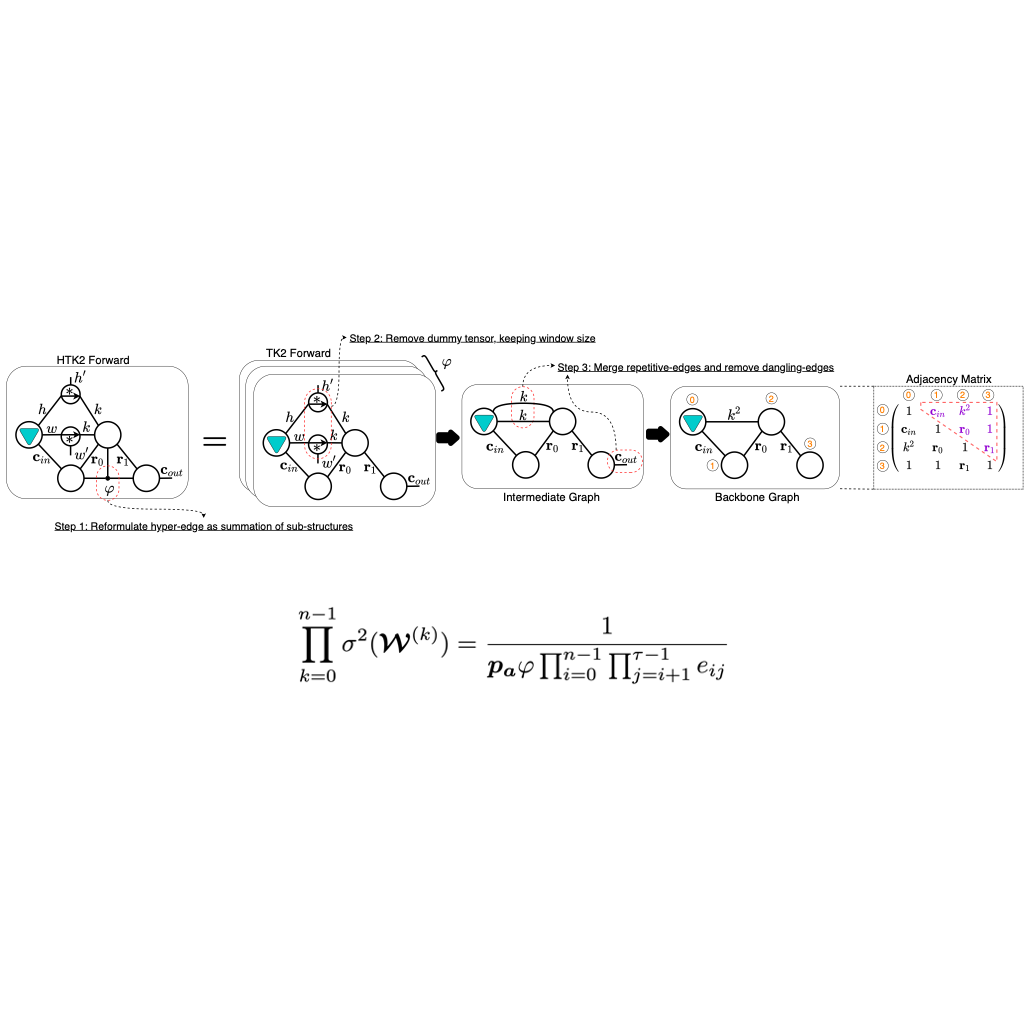

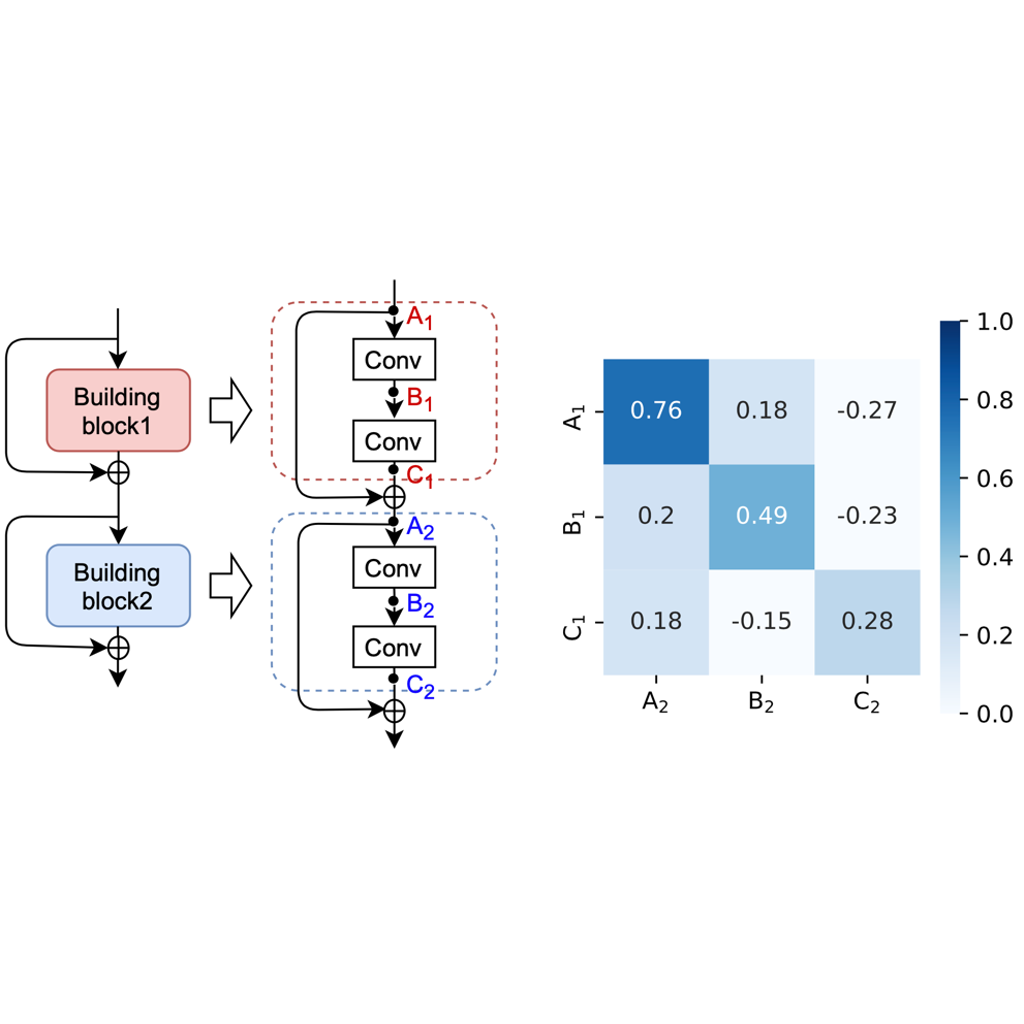

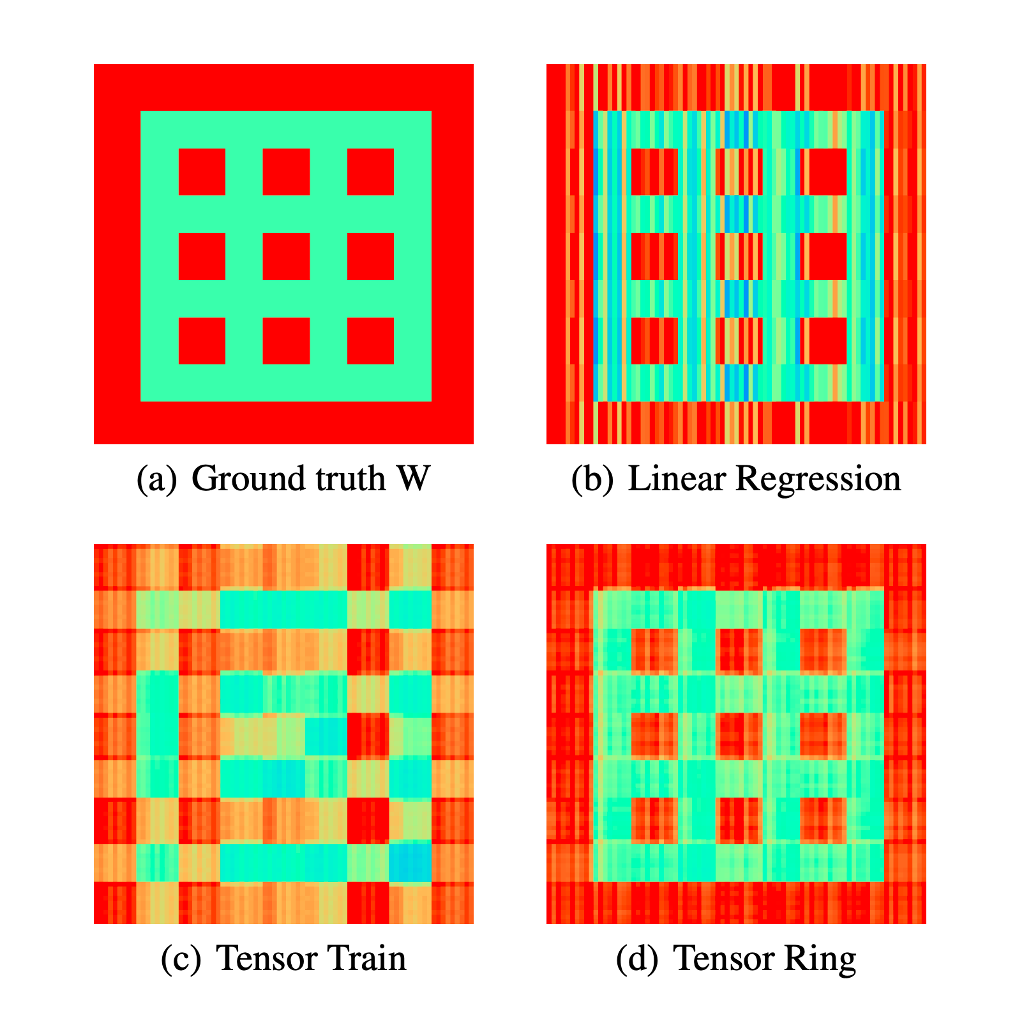

Yu Pan, Zeyong Su, Ao Liu, Jingquan Wang, Nannan Li, Zenglin Xu

ICML, 2022

abs / slide / arXiv

Calculating suitable variances of weights for arbitrary Tensorial Convolutional Neural Networks (TCNNs).


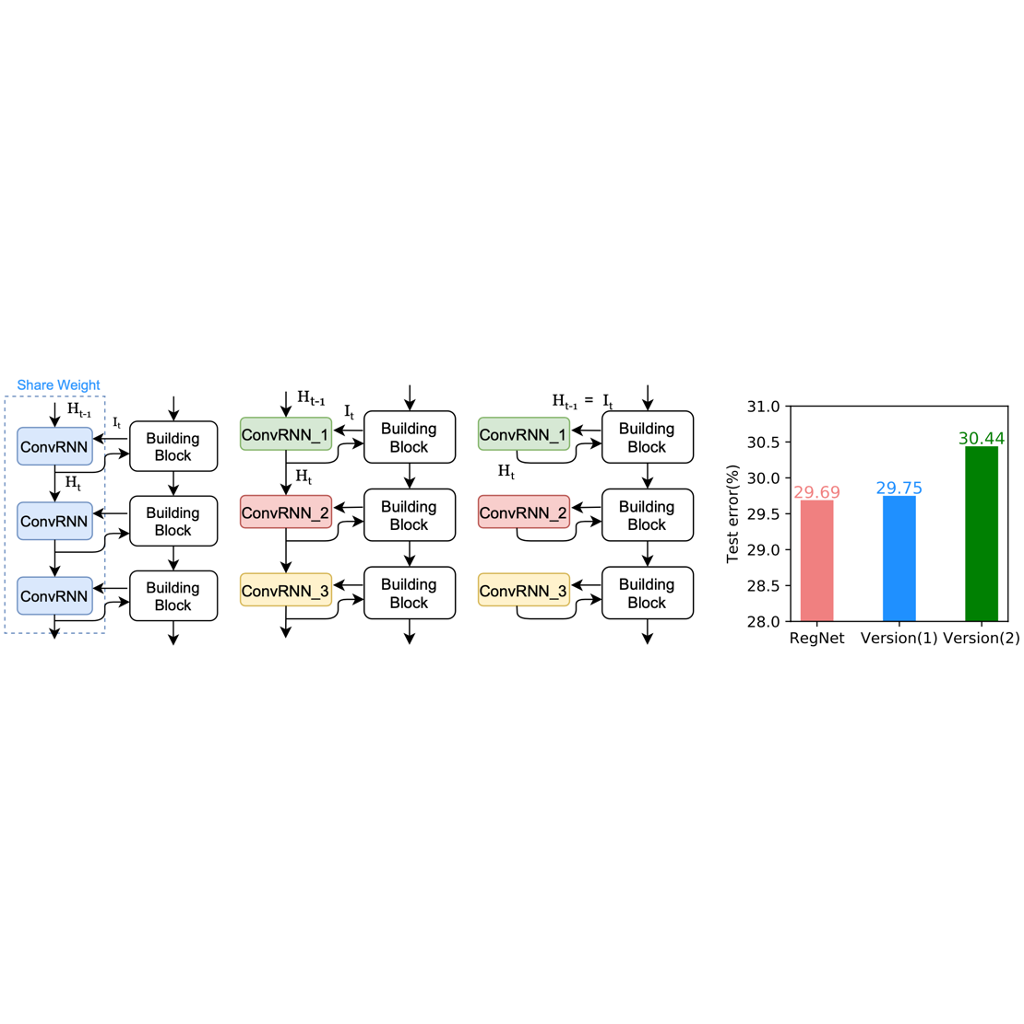

Jing Xu, Yu Pan, Xinglin Pan, Kun Bai, Steven Hoi, Zhang Yi, Zenglin Xu

TNNLS, 2022

abs / arXiv

Applying recurrent neural networks (RNNs) to regulate convolutional neural networks (CNNs) for performance improvement.


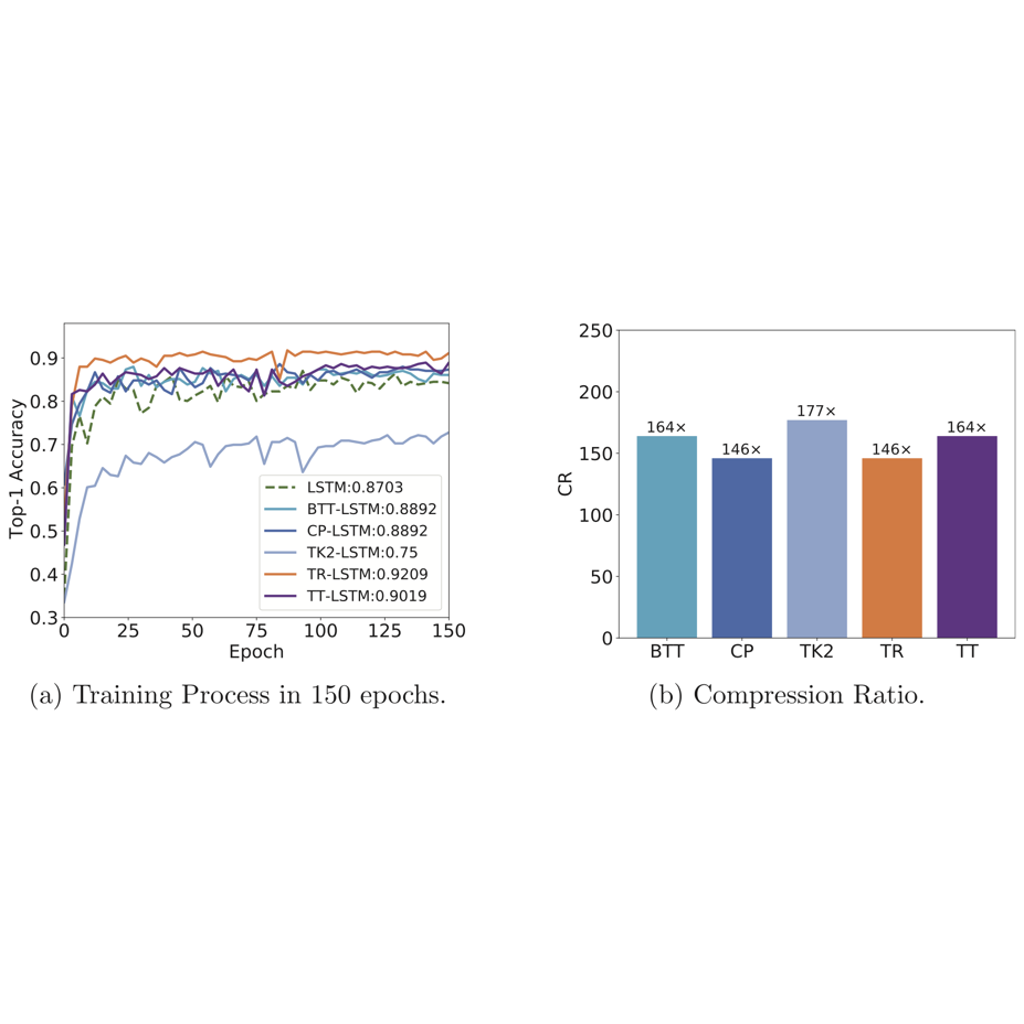

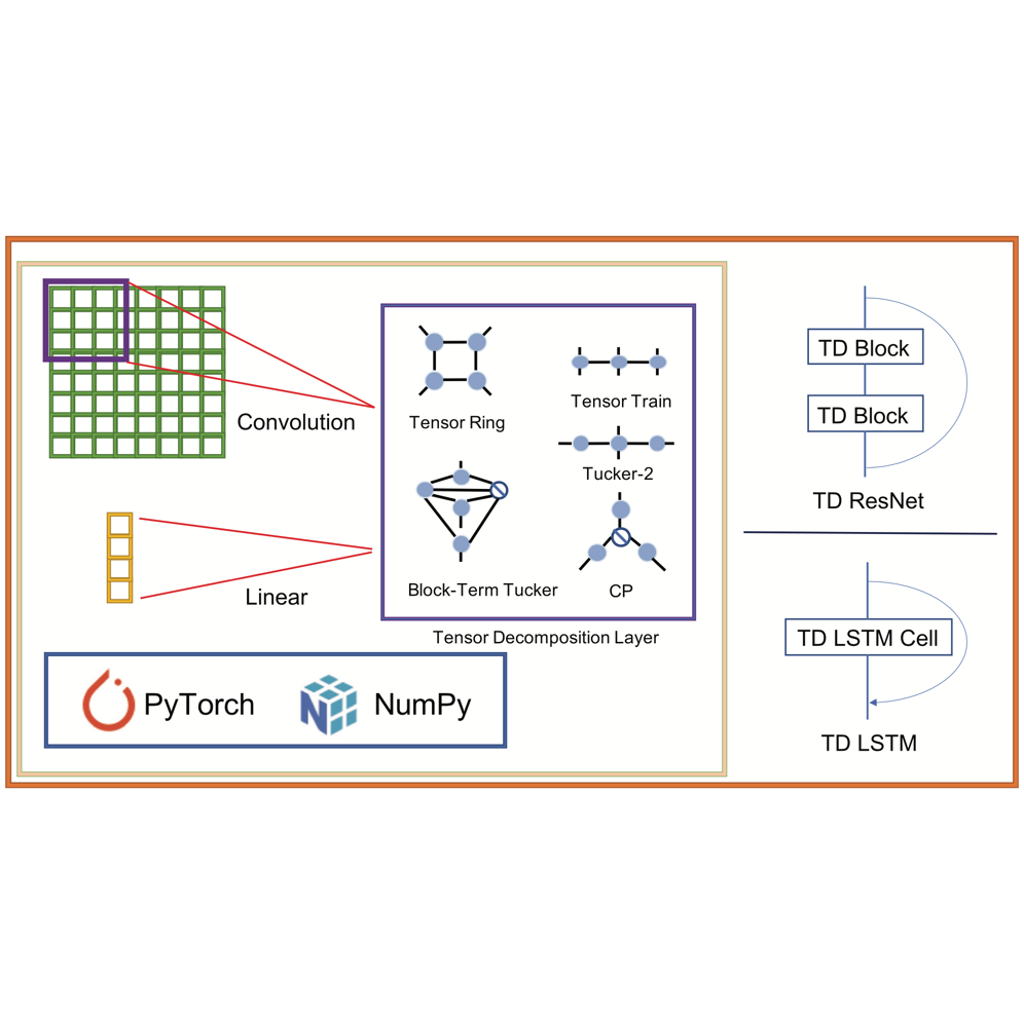

Yu Pan, Maolin Wang, Zenglin Xu

Neurocomputing, 2022

abs / arXiv / code

TedNet, a toolkit that offers a flexible way to construct Tensor Decomposition Networks (TDNs).


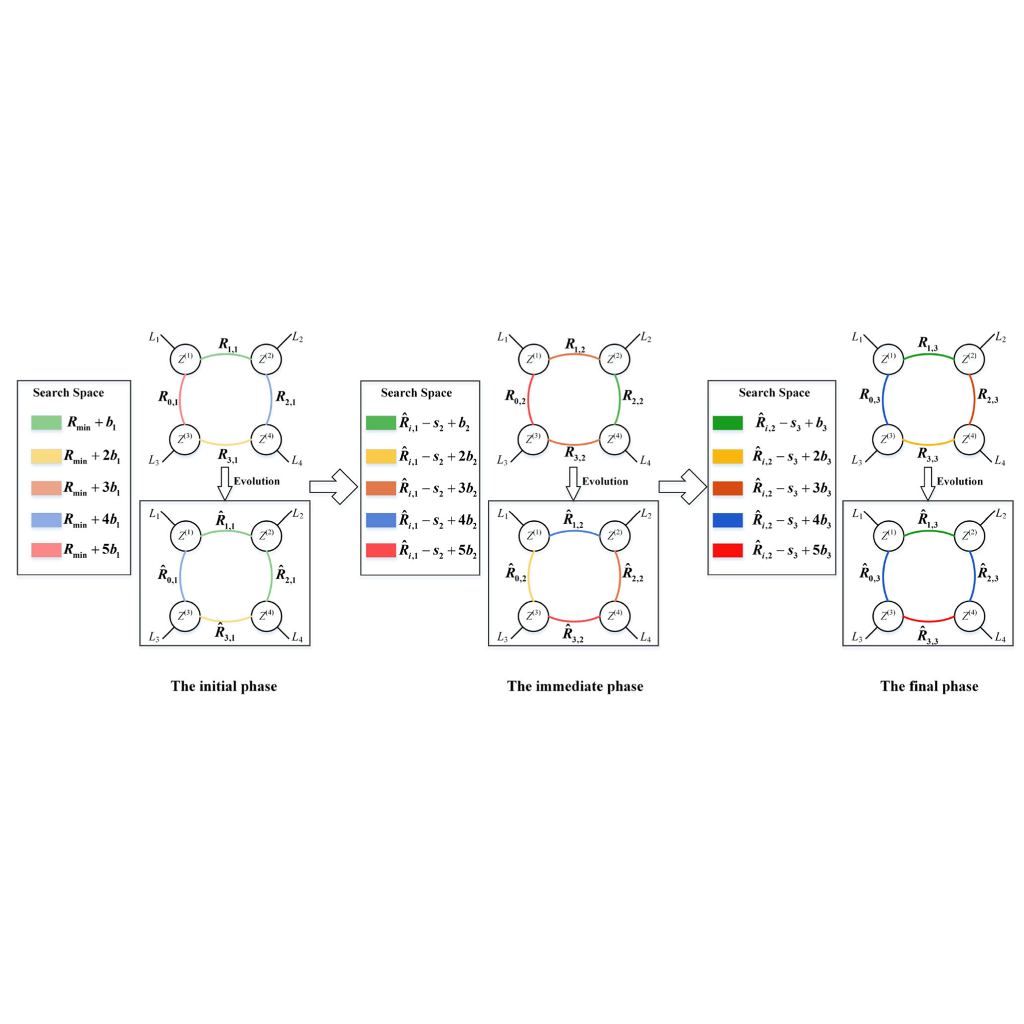

Yu Pan*, Nannan Li*, Yaran Chen, Zixiang Ding, Dongbin Zhao, Zenglin Xu

Complex & Intelligent Systems, 2021

abs / arXiv

Applying a Genetic Algorithm (GA) to search tensor ring based deep models.


Yu Pan, Jing Xu, Maolin Wang, Jinmian Ye, Fei Wang, Kun Bai, Zenglin Xu

AAAI, 2019

abs / arXiv / code

Utilizing tensor ring decomposition for compressing recurrent neural networks (RNNs) by factorizing the input-to-hidden layer.
